Abbreviations

AUC: Area Under the Curve

AUROC: Area Under the Receiver Operating Characteristic curve

Most of the time, AUC is used to mean AUROC, which is bad practice: as Marc Claesen pointed out, AUC is ambiguous (it could be the area under any curve), whereas AUROC is not.

Interpreting the AUROC

The AUROC has several equivalent interpretations:

The expectation that a uniformly drawn random positive sample is ranked before a uniformly drawn random negative sample

The expected proportion of positive samples ranked before a uniformly drawn random negative sample

The expected true positive rate if the ranking is split just before a uniformly drawn random negative sample

The expected proportion of negative samples ranked after a uniformly drawn random positive sample

The expected true negative rate (that is, 1 minus the false positive rate) if the ranking is split just after a uniformly drawn random positive sample

Read more: How to derive the probabilistic interpretation of AUROC (https://stats.stackexchange.com/questions/180638/how-to-derive-the-probabilistic-interpretation-of-the-auc/277721#277721)
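The first interpretation can be checked numerically on a small example. The sketch below is only an illustration: the function name `pairwise_auroc` and the toy labels and scores are assumptions made here, not part of the original text. It counts the fraction of (positive, negative) pairs in which the positive sample receives the higher score, counting ties as half.

```python
from itertools import product


def pairwise_auroc(labels, scores):
    """Estimate P(score of random positive > score of random negative).

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = positive, 0 = negative.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
print(pairwise_auroc(labels, scores))
```

In this toy data, 8 of the 9 positive/negative pairs are ordered correctly (only the positive scored 0.4 falls below the negative scored 0.7), so the function returns 8/9.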

Computing the AUROC

Suppose we have a probabilistic binary classifier, such as logistic regression.

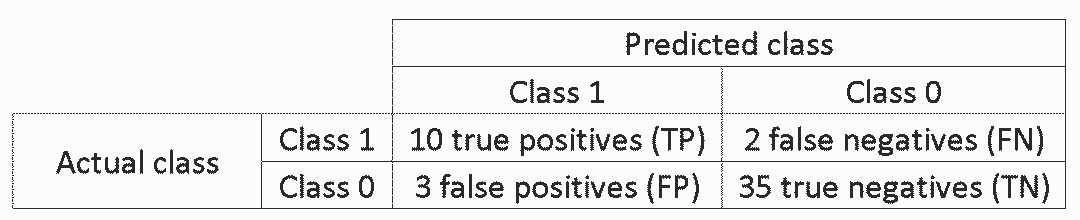

Before discussing the ROC curve (receiver operating characteristic curve), we need to understand the concept of the confusion matrix. A binary prediction has 4 possible outcomes:

We predict 0 and the true class is 0: this is called a True Negative, i.e. we correctly predict that the class is negative (0). For example, antivirus software does not flag a harmless file as a virus.

We predict 0 and the true class is 1: this is called a False Negative, i.e. we incorrectly predict that the class is negative (0). For example, antivirus software fails to detect a virus.

We predict 1 and the true class is 0: this is called a False Positive, i.e. we incorrectly predict that the class is positive (1). For example, antivirus software flags a harmless file as a virus.

We predict 1 and the true class is 1: this is called a True Positive, i.e. we correctly predict that the class is positive (1). For example, antivirus software correctly detects a virus.

By counting how many times each of these four outcomes occurs among the predictions made by our statistical model, we obtain the confusion matrix:

In the confusion matrix example above, 45 of the 50 classified data points are classified correctly and 5 are misclassified.
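As a rough sketch of how the four counts can be tallied from true labels and predictions: the helper name `confusion_counts` and the eight-point data set below are hypothetical and are not the 50-point example mentioned in the text.

```python
def confusion_counts(y_true, y_pred):
    """Return (TN, FP, FN, TP) for binary labels coded as 0 and 1."""
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 0 and pred == 0:
            tn += 1  # correctly predicted negative
        elif truth == 0 and pred == 1:
            fp += 1  # negative mistaken for positive
        elif truth == 1 and pred == 0:
            fn += 1  # positive that was missed
        elif truth == 1 and pred == 1:
            tp += 1  # correctly predicted positive
        else:
            raise ValueError("labels must be 0 or 1")
    return tn, fp, fn, tp


# Hypothetical data chosen only for illustration.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 0, 1, 1, 1]
tn, fp, fn, tp = confusion_counts(y_true, y_pred)
accuracy = (tn + tp) / len(y_true)  # share of correct classifications
print(tn, fp, fn, tp, accuracy)
```

The correct classifications are the true negatives plus the true positives, which is how a figure such as "45 of 50 correct" is read off a confusion matrix.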

When comparing two different models, it is often more convenient to use a single metric than several. Below we compute two metrics from the confusion matrix, which we will then combine into one:

True positive rate (TPR), also known as sensitivity, hit rate, or recall, is defined as TP/(TP+FN). Intuitively, this metric is the proportion of positive data points that are correctly identified as positive, out of all positive data points. In other words, the higher the TPR, the fewer positive data points we miss.

False positive rate (FPR), also known as fall-out or false alarm rate, is defined as FP/(FP+TN). Intuitively, this metric is the proportion of negative data points that are mistakenly identified as positive, out of all negative data points. In other words, the higher the FPR, the more negative data points we misclassify.
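The two definitions translate directly into code. This is a minimal sketch; the function names and the counts used below are assumptions made for illustration only.

```python
def true_positive_rate(tp, fn):
    """TPR = TP / (TP + FN): share of actual positives predicted positive."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """FPR = FP / (FP + TN): share of actual negatives predicted positive."""
    return fp / (fp + tn)


# Hypothetical counts chosen only for illustration.
tp, fn, fp, tn = 40, 10, 5, 45
print(true_positive_rate(tp, fn))   # 0.8
print(false_positive_rate(fp, tn))  # 0.1
```

Note that each rate is normalized by a different group: TPR by the actual positives (TP + FN) and FPR by the actual negatives (FP + TN).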

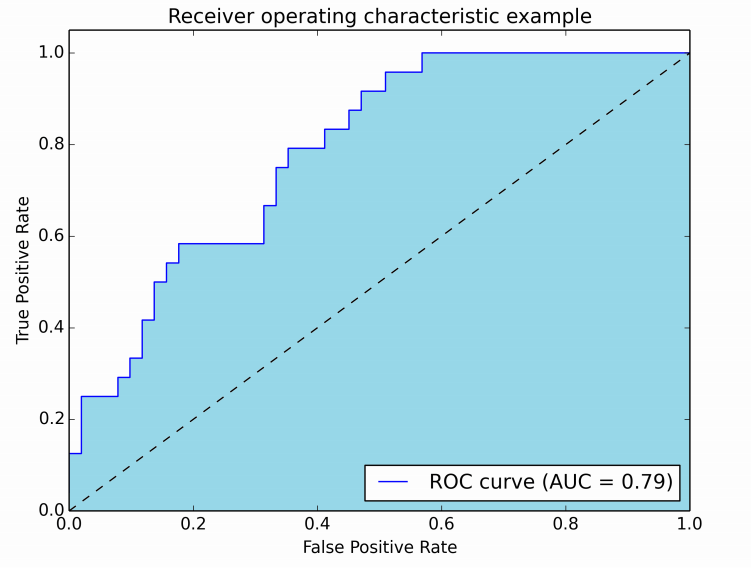

To combine the FPR and the TPR into a single metric, we first compute these two metrics for the logistic regression at many different thresholds (for example 0.00, 0.01, 0.02, ..., 1.00), and then plot them on a single graph, with the FPR values on the horizontal axis and the TPR values on the vertical axis. The resulting curve is the ROC curve, and the metric we consider is the AUC of this curve, which we call the AUROC.
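The threshold sweep can be sketched as follows. The function names, the toy probabilities, and the rule "predict positive when the probability is at least the threshold" are assumptions made here; the area is approximated with the trapezoidal rule, so a coarse threshold grid gives only an approximation in general.

```python
def roc_points(labels, probs, thresholds):
    """Return one (FPR, TPR) pair per threshold.

    A sample is predicted positive when its probability is >= the threshold.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = sum(1 for y in labels if y == 0)
    points = []
    for t in thresholds:
        tp = sum(1 for y, p in zip(labels, probs) if y == 1 and p >= t)
        fp = sum(1 for y, p in zip(labels, probs) if y == 0 and p >= t)
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_area(points):
    """Area under the piecewise-linear curve through the (x, y) points."""
    pts = sorted(points)  # order by FPR, then by TPR
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical predicted probabilities from some fitted classifier.
labels = [1, 1, 1, 0, 0, 0]
probs = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
thresholds = [i / 100 for i in range(101)]  # 0.00, 0.01, ..., 1.00
points = roc_points(labels, probs, thresholds)
points.append((0.0, 0.0))  # guard: a threshold above every score predicts no positives
auroc = trapezoid_area(points)
print(auroc)
```

At threshold 0.00 every sample is predicted positive, giving the point (1, 1); as the threshold rises the curve moves toward (0, 0). For this toy data the area comes out to 8/9, up to floating-point rounding.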

The figure below illustrates the AUROC:

In the figure above, the blue area is the area under the receiver operating characteristic curve (AUROC). The diagonal dashed line is the ROC curve of a random predictor, which has an AUROC of 0.5. The random predictor is commonly used as a baseline to check whether a model is useful.
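The 0.5 baseline can be illustrated with a small simulation. This is a sketch under assumed settings: the sample sizes, the seed, and the helper name `auroc` are choices made here. The scores are drawn independently of the labels, so the estimate should land near 0.5, up to sampling noise.

```python
import random


def auroc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


rng = random.Random(0)  # fixed seed so the run is repeatable
labels = [1] * 500 + [0] * 500
random_scores = [rng.random() for _ in labels]  # scores ignore the labels entirely
baseline = auroc(labels, random_scores)
print(round(baseline, 3))  # close to 0.5, up to sampling noise
```

A model whose AUROC is not clearly above this baseline ranks positives ahead of negatives no better than chance.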

If you want to get some first-hand experience:

Python: http://scikit-learn.org/stable/auto_examples/model_selection/plot_roc.html

MATLAB: http://
