Abstract: This paper proposes a curvelet-transform fusion method for multi-source remote sensing images based on neighborhood matching degree and an energy-weighted average. The low-resolution multispectral image and the high-resolution panchromatic image are each decomposed by the curvelet transform, and the low-frequency component of the fused image is taken from the low-frequency component of the multispectral image. The matching degree of the high-frequency coefficients of the two images is computed within a neighborhood and compared with a threshold, and the high-frequency coefficients of the fused image are determined by different methods according to the result. The fused image is obtained by applying the inverse curvelet transform to the resulting coefficients. Experiments show that both the sharpness and the spectral properties of the fused image are effectively improved.


Key words: image fusion; curvelet transform; neighborhood energy; matching

The curvelet transform can effectively describe high-dimensional signals with curve or hyperplane singularities. After an N-scale curvelet transform of an image, the first layer holds the low-frequency coefficients, which represent the contour information of the image; the N-th layer holds the high-frequency coefficients, which reflect the details and edge features of the image. Layers 2 to N-1 hold the medium- and high-frequency coefficients, which contain the edge features of image elements and are multi-directional. Curvelet fusion of remote sensing images generally adopts pixel-based fusion rules: the coarse-scale coefficients are taken from the multispectral image, and each fine-scale coefficient is taken from whichever of the multispectral and panchromatic coefficients has the larger modulus. Designing the fusion rules on a neighborhood basis, so that the statistical characteristics within each neighborhood are taken into account, can effectively improve fusion quality.

1 Fusion method based on curvelet transform

Curvelets inherit and extend the good localization of wavelet analysis in both the spatial and frequency domains, and the curvelet transform is a relatively new multiscale analysis tool. Compared with the wavelet, it is better suited to describing the geometric features of images and to extracting their detail information. The wavelet uses square "block" bases, which approximate edges poorly and tend to blur them, whereas the curvelet uses "wedge-shaped" bases. The biggest difference is directionality: curvelets can be oriented at arbitrary angles, while wavelets have only three directions (horizontal, vertical, and diagonal), so curvelets are anisotropic. A large curvelet coefficient is obtained only when a wedge-shaped basis element overlaps a singular feature and its direction matches the geometry of that feature. In addition, curvelets have better sparse representation ability than wavelets: they can represent image edges, such as curves and line features, with fewer large coefficients, overcoming the wavelet transform's drawback of spreading important features across multiple scales. Because the energy is more concentrated after the transform, it is easier to track and analyze the important features of the image. Introducing the curvelet transform into image fusion therefore allows the features of the source images to be extracted better and provides more information for the fused image.

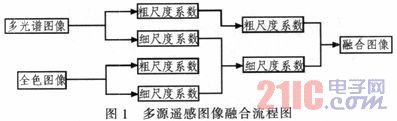

The basic steps of fusion are:

1) Apply the curvelet transform to each source image to obtain curvelet coefficients at different resolution levels, comprising a coarse layer, detail layers, and a fine layer;

2) Adopt different fusion rules for different scale layers, according to the purpose of the fusion application. The rules generally adopted for curvelet fusion of remote sensing images are: the coarse-scale coefficients are taken from the multispectral image, and each fine-scale coefficient is taken from whichever source has the larger absolute value;

3) Apply the inverse curvelet transform to the fused coefficients of all layers to obtain the fused image.

The fusion process is shown in Figure 1.
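The baseline pixel-based rule of step 2 can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the paper's code: the coefficient layout (a list of scales, each a list of 2-D real-valued arrays, with index 0 as the coarse layer) is a hypothetical convention loosely modeled on how curvelet toolboxes organize coefficients by scale and orientation, and the forward and inverse transforms themselves are not shown. The function name `fuse_single_pixel` is illustrative.

```python
import numpy as np

# Hypothetical layout (an assumption, not from the paper): coeffs[j][l] is the 2-D
# array of real curvelet coefficients at scale j and orientation (wedge) l, with
# j = 0 the coarse layer. The curvelet transform itself is not implemented here.

def fuse_single_pixel(coeffs_ms, coeffs_pan):
    """Baseline pixel-based rule: coarse layer from the multispectral (MS) image,
    every other coefficient from whichever source has the larger modulus."""
    # Coarse layer: copy the multispectral coefficients unchanged.
    fused = [[np.array(c, dtype=float) for c in coeffs_ms[0]]]
    for scale_ms, scale_pan in zip(coeffs_ms[1:], coeffs_pan[1:]):
        fused.append([
            # Per-pixel max-abs selection; ties keep the MS coefficient.
            np.where(np.abs(m) >= np.abs(p), m, p)
            for m, p in zip(scale_ms, scale_pan)
        ])
    return fused
```

Because each coefficient is chosen independently, this rule ignores the neighborhood context that the following sections add.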

The fusion rule in step 2 operates on single pixels. Multi-source remote sensing images are acquired with different imaging modes, and the multispectral bands differ in their spectral features; the images are poorly correlated, and precise registration is difficult. A single-pixel fusion rule ignores regional information and is therefore unsuitable for fusing multi-source remote sensing images with these characteristics. The fusion rule designed here is instead neighborhood-based: it considers the statistical characteristics and the matching degree of each region, chooses the high-frequency coefficient extraction method according to the neighborhood matching degree, and determines the weighting coefficients from the neighborhood statistics of the two images.

2 Fusion rule design based on neighborhood energy and matching degree

The statistical characteristics of a region mainly include energy, variance, and gradient. Energy is expressed by the square of the curvelet coefficients. After the curvelet transform, the energy is concentrated mainly in the low-frequency coefficients, and the energy of the high-frequency coefficients decreases as the number of transform layers increases. The matching degree characterizes the correlation between corresponding regions of different images. In the literature, pixel gray values have been weighted directly according to the neighborhood matching degree, and the fused image then used as the source image for a second fusion. This paper introduces the curvelet transform into that process and evaluates the neighborhood matching degree in the curvelet domain: different high-frequency coefficient extraction methods are adopted according to the neighborhood matching degree, and in the weighting case the weighting factors are determined from the neighborhood energy of the two images.
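Neighborhood energy, the statistic that drives the weighting below, can be sketched as a windowed sum of squared coefficients. The text does not reproduce the window size or any window weights, so an unweighted 3×3 window (`radius=1`) with zero padding at the borders is an assumption here, and `neighborhood_energy` is a hypothetical helper name.

```python
import numpy as np

def neighborhood_energy(v, radius=1):
    """E(x, y): sum of squared coefficients over a (2r+1)x(2r+1) window centred
    on (x, y). The unweighted window and zero padding are assumptions."""
    v = np.asarray(v, dtype=float)
    h, w = v.shape
    padded = np.pad(v ** 2, radius, mode="constant")  # zero padding at borders
    energy = np.zeros((h, w))
    size = 2 * radius + 1
    # Accumulate every shifted copy of V^2 that falls inside the window.
    for dy in range(size):
        for dx in range(size):
            energy += padded[dy:dy + h, dx:dx + w]
    return energy
```

With zero padding, border pixels sum over fewer real coefficients; a reflective padding mode would be an equally reasonable choice.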

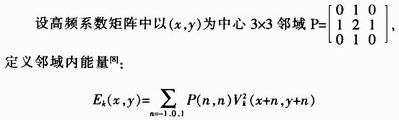

V_k(x, y) denotes the value at point (x, y) of a coefficient matrix, where the subscript k = M, H, or F: M denotes the multispectral image, H the high-resolution image, and F the fused image. The matching degree within the neighborhood of the high-frequency curvelet coefficient matrices of the multispectral and high-resolution images is defined as:

![]()
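Because the defining equation for the matching degree is not reproduced in this text, the sketch below assumes the widely used Burt-style match measure, M = 2ΣV_M·V_H / (E_M + E_H) over the neighborhood, which lies in [-1, 1] and equals 1 when the two neighborhoods are identical. This form is consistent with the threshold-based weights that follow, but it is an assumption, as are the 3×3 zero-padded window and the convention of returning 1 where both neighborhoods are all zero. The function names are hypothetical.

```python
import numpy as np

def _window_sum(a, radius=1):
    """Sum of `a` over a (2r+1)x(2r+1) window around each pixel (zero padding)."""
    h, w = a.shape
    padded = np.pad(a, radius, mode="constant")
    out = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def matching_degree(v_m, v_h, radius=1):
    """Assumed Burt-style match measure:
    M(x, y) = 2 * sum(V_M * V_H) / (E_M + E_H), which lies in [-1, 1]."""
    v_m = np.asarray(v_m, dtype=float)
    v_h = np.asarray(v_h, dtype=float)
    cross = 2.0 * _window_sum(v_m * v_h, radius)
    total = _window_sum(v_m ** 2, radius) + _window_sum(v_h ** 2, radius)
    # Where both neighborhoods are all zero, treat them as perfectly matched.
    return np.divide(cross, total, out=np.ones_like(cross), where=total > 0)
```

By the Cauchy-Schwarz inequality the numerator never exceeds the denominator in magnitude, which is why the measure stays in [-1, 1] and can be compared against a threshold λ in [0, 1).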

When the high-resolution panchromatic image and the low-resolution multispectral image are fused by the curvelet transform, the coefficient at each center point is selected according to the matching degree. If the matching degree is smaller than the threshold λ, the spatial characteristics of the two images differ at that position, so the center coefficient of the region with the larger energy is taken as the coefficient of the fused image. If the matching degree is greater than the threshold λ, the two images are strongly correlated there, and a weighted average is used. The weight assigned to the high-frequency coefficient with the smaller neighborhood energy is:

$$K_{\min} = 0.5 - 0.5\,\frac{1 - M_C}{1 - \lambda}$$

The weight assigned to the high-frequency coefficient with the larger neighborhood energy is:

$$K_{\max} = 1 - K_{\min}$$
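The two weights follow directly from the formulas above. The sketch (function name hypothetical) also makes their behavior explicit: K_min rises linearly from 0 when the matching degree equals λ to 0.5 when it equals 1, so a barely matched pair is dominated by the higher-energy coefficient, while a perfectly matched pair is averaged equally.

```python
def fusion_weights(match, lam):
    """Return (K_min, K_max) for a matching degree `match` >= threshold `lam`.

    K_min weights the coefficient with the smaller neighborhood energy and
    K_max = 1 - K_min weights the one with the larger neighborhood energy.
    """
    if not 0.0 <= lam < 1.0:
        raise ValueError("lam must satisfy 0 <= lam < 1")
    k_min = 0.5 - 0.5 * (1.0 - match) / (1.0 - lam)
    return k_min, 1.0 - k_min
```

For example, with λ = 0.3 (the value chosen in the experiments) and a matching degree of 0.65, K_min = 0.5 - 0.5 × 0.35 / 0.7 = 0.25 and K_max = 0.75.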

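The selection rule for the high-frequency coefficients is therefore as follows, where M_C is the neighborhood matching degree and E_M(x, y) and E_H(x, y) are the neighborhood energies of the two images:

$$V_F(x,y)=\begin{cases} V_M(x,y), & M_C<\lambda,\ E_M(x,y)\ge E_H(x,y)\\ V_H(x,y), & M_C<\lambda,\ E_M(x,y)<E_H(x,y)\\ K_{\max}V_M(x,y)+K_{\min}V_H(x,y), & M_C\ge\lambda,\ E_M(x,y)\ge E_H(x,y)\\ K_{\min}V_M(x,y)+K_{\max}V_H(x,y), & M_C\ge\lambda,\ E_M(x,y)<E_H(x,y) \end{cases}$$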

For curvelet fusion, the neighborhood energy and matching degree fusion rule is designed as follows:

1) The coarse layer takes the coarse-scale coefficients of the multispectral image;

2) The detail layers and the fine layer, that is, the medium-high-frequency and high-frequency components, use the high-frequency selection rule above.
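Putting the pieces together, the complete rule (coarse layer from the multispectral image; detail and fine layers by neighborhood energy and matching degree) can be sketched as below. It rests on the same assumptions as the earlier sketches: a hypothetical list-of-scales coefficient layout with index 0 as the coarse layer, a 3×3 zero-padded window, and a Burt-style match measure standing in for the matching-degree equation not reproduced here. Ties in neighborhood energy are resolved in favor of the multispectral coefficient, which is an arbitrary choice.

```python
import numpy as np

def _window_sum(a, radius=1):
    """Sum of `a` over a (2r+1)x(2r+1) window around each pixel (zero padding)."""
    h, w = a.shape
    padded = np.pad(a, radius, mode="constant")
    out = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def fuse_high_freq(v_m, v_h, lam=0.3, radius=1):
    """Neighborhood energy / matching-degree rule for one high-frequency array."""
    v_m = np.asarray(v_m, dtype=float)
    v_h = np.asarray(v_h, dtype=float)
    e_m = _window_sum(v_m ** 2, radius)        # neighborhood energy of MS
    e_h = _window_sum(v_h ** 2, radius)        # neighborhood energy of PAN
    total = e_m + e_h
    # Assumed Burt-style matching degree; all-zero neighborhoods count as matched.
    match = np.divide(2.0 * _window_sum(v_m * v_h, radius), total,
                      out=np.ones_like(total), where=total > 0)
    m_larger = e_m >= e_h                      # ties favor the MS coefficient
    v_big = np.where(m_larger, v_m, v_h)       # coefficient with larger energy
    v_small = np.where(m_larger, v_h, v_m)     # coefficient with smaller energy
    k_min = 0.5 - 0.5 * (1.0 - match) / (1.0 - lam)
    weighted = (1.0 - k_min) * v_big + k_min * v_small
    # Poorly matched: select the larger-energy coefficient; otherwise weight.
    # (k_min is negative where match < lam, but those values are discarded here.)
    return np.where(match < lam, v_big, weighted)

def fuse_neighborhood(coeffs_ms, coeffs_pan, lam=0.3, radius=1):
    """Rule 1): coarse layer from MS; rule 2): detail and fine layers via fuse_high_freq."""
    fused = [[np.array(c, dtype=float) for c in coeffs_ms[0]]]
    for scale_ms, scale_pan in zip(coeffs_ms[1:], coeffs_pan[1:]):
        fused.append([fuse_high_freq(m, p, lam, radius)
                      for m, p in zip(scale_ms, scale_pan)])
    return fused
```

Identical neighborhoods reduce to a plain average (K_min = 0.5), while uncorrelated or anti-correlated neighborhoods fall back to max-energy selection.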

3 Experiments and analysis of results

The high-spatial-resolution panchromatic image reflects spatial structure information and describes the details of ground features well, but its spectral resolution is low, so it cannot describe their spectral information well. The low-spatial-resolution multispectral image is rich in spectral information and plays an important role in recognizing and interpreting features. Fusing the multispectral image with the panchromatic image can improve the spatial resolution of the multispectral image while maintaining its spectral characteristics, which improves subsequent processing and meets different application requirements. The experimental data come from the QuickBird satellite: the high-resolution image is its panchromatic band image, shown in Figure 3, and the RGB bands of its multispectral image are combined into a color image, shown in Figure 4. The experiments examine the influence of the threshold setting on the fusion effect and compare the results with three groups of fusion experiments based on the single-pixel fusion rule. The number of curvelet transform layers is set to 5.

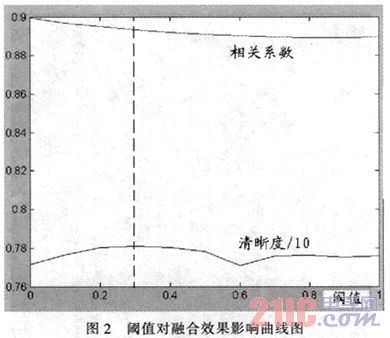

Figure 2 shows the influence of the threshold λ on the fusion effect. The horizontal axis is λ, ranging from 0.0 to 1.0, and the vertical axis shows the correlation coefficient and one-tenth of the sharpness: the upper curve is the correlation coefficient, and the lower curve is one-tenth of the sharpness value. As λ increases, the correlation coefficient decreases, but only slightly. The sharpness curve varies considerably, with a peak at λ = 0.3 and a trough at λ = 0.6. Taking both into account, sharpness and correlation coefficient both reach good values at λ = 0.3.

The fusion effect is measured in two respects. The first is how well the fused image expresses spatial detail, measured by information entropy, variance, and sharpness. The second is how well the spectral characteristics are retained, measured by the deviation index and the correlation coefficient. Information entropy characterizes how much information an image contains. Sharpness reflects the clarity of the image, that is, the contrast of fine details and changes in texture features; the higher the sharpness, the better the fusion effect. The deviation index indicates how far the fused image deviates from a source image; the smaller it is, the closer the two images are. In multi-source remote sensing image fusion, it is used to compare the fused image with the multispectral image: the smaller the deviation index, the better the spectral information is preserved and the better the fusion effect. The correlation coefficient measures the degree of correlation between the fused image and the multispectral image; the closer it is to 1, the more similar the two images are and the better the fusion effect.
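The text does not give formulas for these metrics, so the sketch below uses common textbook definitions, which may differ in detail from those used to produce Table 1: Shannon entropy of the 8-bit gray-level histogram, sharpness as the average gradient, the deviation index as the mean relative absolute difference from the multispectral band, and the Pearson correlation coefficient. Each function compares a single band; the function names are illustrative.

```python
import numpy as np

def information_entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit band."""
    hist = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = hist[hist > 0] / hist.sum()            # probabilities of occupied levels
    return float(-(p * np.log2(p)).sum())

def average_gradient(img):
    """Sharpness as the mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    f = np.asarray(img, dtype=float)
    dx = f[:-1, 1:] - f[:-1, :-1]
    dy = f[1:, :-1] - f[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def deviation_index(fused, ms):
    """Mean of |F - M| / M over pixels where the multispectral value is nonzero."""
    f = np.asarray(fused, dtype=float)
    m = np.asarray(ms, dtype=float)
    mask = m != 0
    return float(np.mean(np.abs(f[mask] - m[mask]) / m[mask]))

def correlation_coefficient(fused, ms):
    """Pearson correlation between the fused band and the multispectral band."""
    return float(np.corrcoef(np.ravel(fused), np.ravel(ms))[0, 1])
```

Entropy and average gradient score spatial detail, while the deviation index and correlation coefficient score spectral fidelity against the multispectral band, matching the two groups of criteria described above.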

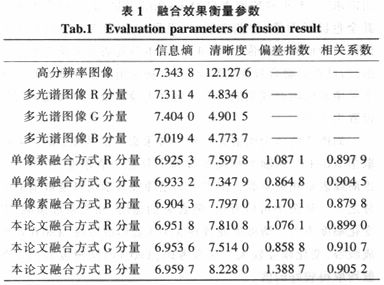

The panchromatic image is fused with the multispectral image by a 5-layer curvelet transform, with the threshold λ of the fusion rule set to 0.3. The fused image obtained with the single-pixel fusion rule is shown in Fig. 5, and the fused image obtained with the fusion rule designed in this paper is shown in Fig. 6. The fusion effect parameters are listed in Table 1. Compared with the single-pixel fusion method, fusing the panchromatic image with each of the three RGB channels of the multispectral image yields higher information entropy, sharpness, and correlation coefficient and a lower deviation index, so the parameters indicate a better fusion effect. Visually, Figure 4 is less clear than Figure 5 and appears hazy. The fusion rule designed in this paper is clearly superior to the single-pixel fusion rule.

4 Conclusion

Because the pixels of an image are often correlated within a local neighborhood, this paper introduces the concept of neighborhood matching degree, proposes a curvelet-based fusion method using neighborhood matching degree and energy-weighted averaging, and gives its implementation. Experimental results show that the method outperforms the single-pixel fusion rule in maintaining spectral characteristics and improving sharpness.
